Apple’s default camera app needs some work. It’s super optimized for taking photographs, but not for all the other things we use our cameras for.

Open the Photos app on your phone and you’ll see three kinds of images: photographs, screenshots, and what I call visual notes. Although they’re very common, we rarely talk about that last category. These images aren’t taken to capture beauty or a memory. Instead, they’re used to quickly save or communicate something: receipts, interesting objects, pages of books, pictures of screens. I take thousands of them.

Since the introduction of the smartphone, the number of images we create has surged. We always carry high-quality cameras and poor keyboards, so we increasingly work with visuals. This changes what we use images for. We still take pictures of beautiful sunsets, but we now also take pictures of anything that’s vaguely interesting.

The strange thing is that the world’s most used camera app, the one Apple pre-installs on every iPhone, has yet to adapt to this new behavior. The app is still optimized for taking photographs, not notes. The only development I’ve seen in this direction is that Apple moved the camera button to the lock screen so you can open it quickly. They don’t seem to realize how much more they could do to build for this new use case. Every camera app update in the last few years has been about taking better photos, not notes.

I have a few ideas that could significantly improve this experience:

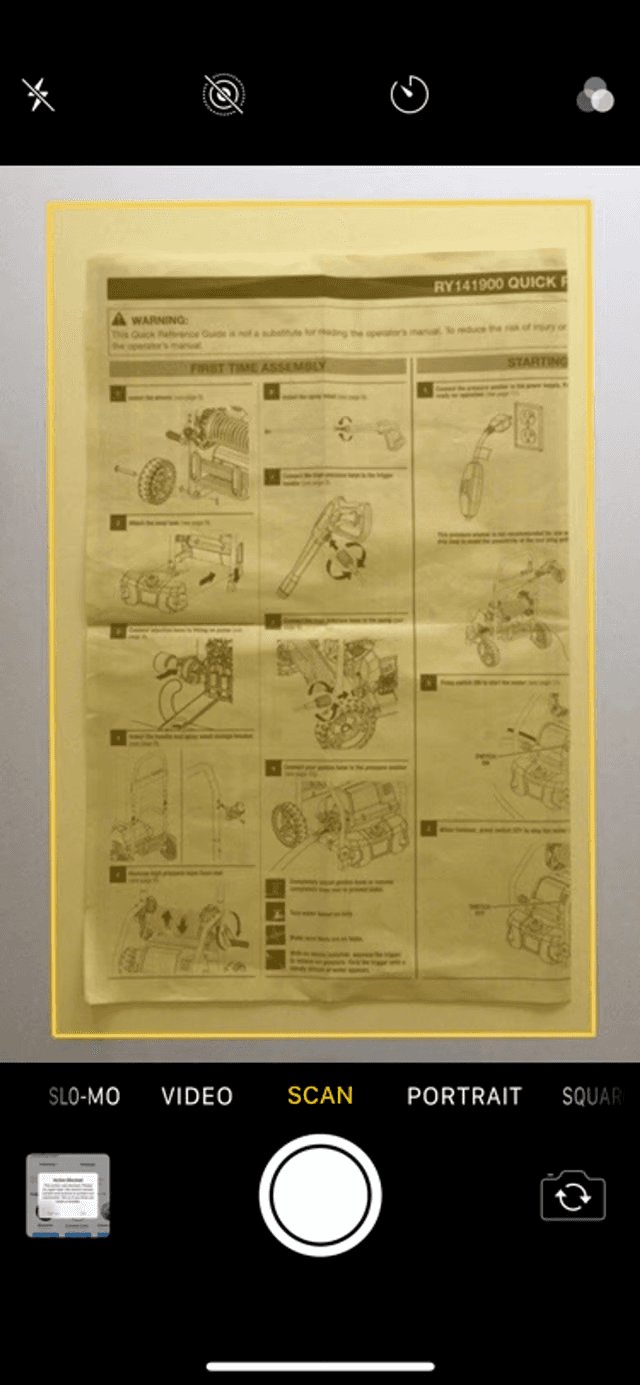

Scanning

We often use the camera to capture documents, notes, or other paper items we want to remember. While taking a photo works, scanning is much better: it can correct odd angles, increase the contrast, and, in theory, make the text selectable. It is possible to scan on iOS, but not in the Camera app. It should be there too; it could simply be one of the camera modes.

Over time, scanning could grow into something like Google Lens, providing context about the objects you’re scanning. For example, if you scan a newspaper article, it could bring up the corresponding web page.

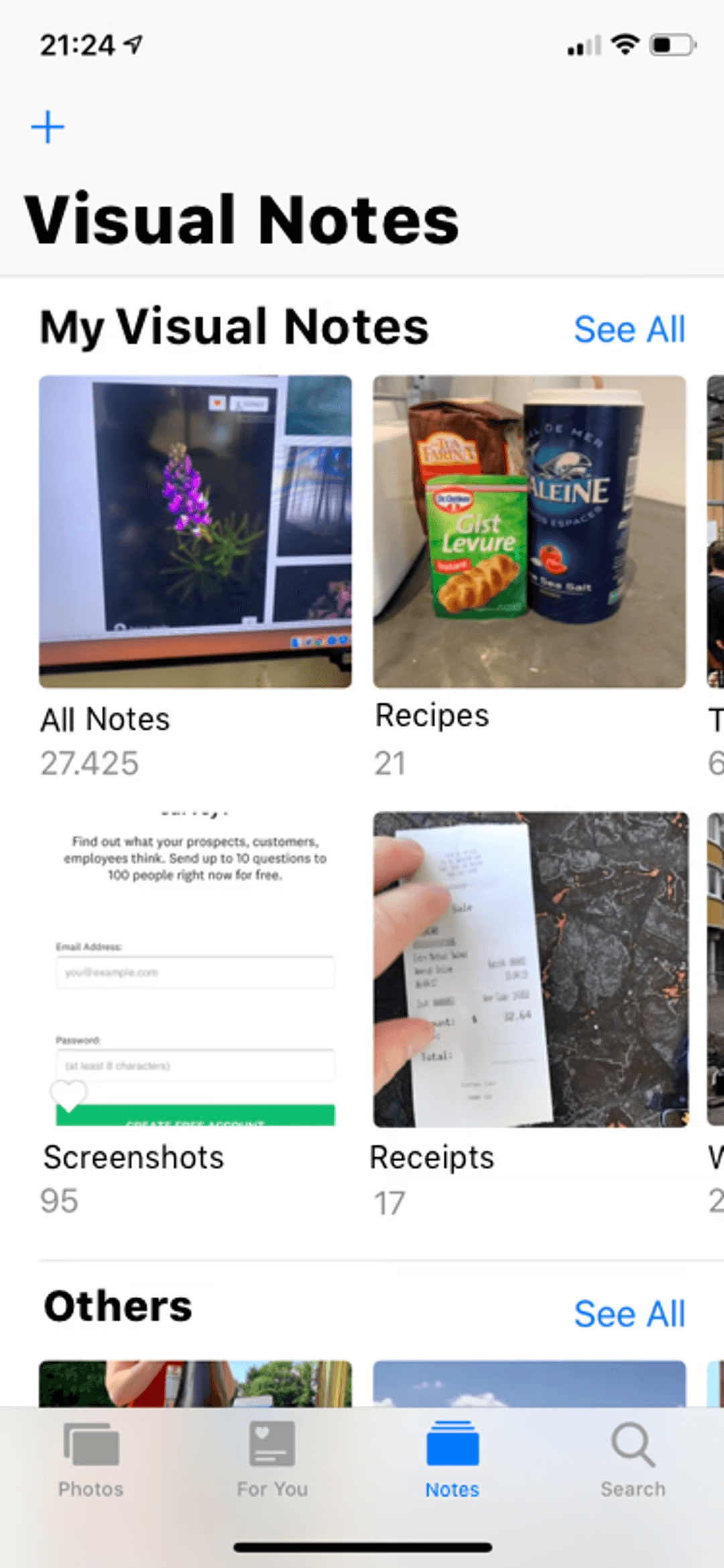

Creating a dedicated space for these images

Our Photos app is filled with these visual notes, odd pictures that serve a completely different purpose. They need their own space so that they don’t pollute our camera rolls. The camera should have a dedicated mode to quickly create these images, or a way to tag them after the fact. They would then end up in a visual notes app, together with screenshots, where they can be organized.

Good organization is key for this content because many of these images serve as reminders. It would be very valuable to add them to a visual to-do list, for example “Things I want to buy”. Anything that needs organization also needs search, and that could be a killer feature here as well. Being able to search for images by location, date, and content could be very powerful.

Over time, it should be possible to detect these images automatically using AI. There are a couple of properties that make them unique and that a computer can recognize:

- They’re taken very quickly: unlike with photographs, people take only one shot of the same thing.
- They very likely contain one important object.
- They rarely contain people.
- They’re never selfies.

Quick markup

These images might need some context to be useful to someone else (or to yourself, later). Screenshots have a lovely markup feature that is very easy to access, and there should be something similar for visual notes. These images are the screenshots of our physical world; it’s fascinating how digital behaviors can translate back to the physical world. After you take a visual note, the camera should automatically open this markup tool so you can add context or highlight what is important.

What’s next?

There are a ton of camera apps out there that incorporate some of this thinking, but none of them are accessible from the iPhone lock screen, which is crucial: you need to be able to capture a note quickly, wherever you are. That’s why I think Apple should either allow third-party apps there or make its own app more about visual notes. The current app is based on an old idea of what a camera is for. Since the introduction of smartphones, cameras have become so much more.

This article was originally published on Medium.